Introduction

Thinly provisioned logical volumes: in our previous LVM guides we set up linear and striped logical volumes. Now let’s explore LVM further with thin provisioning.

Readers who are not familiar with the difference between thick and thin provisioning may find this guide a bit difficult to follow, so here it is in plain English.

Thick provisioning means the whole space is occupied as soon as we allocate it. For example, a large person sits on a couch and fills it completely; no space is left on the couch.

Thin provisioning is the opposite. When we allocate space, it is not all occupied up front; the volume takes up its allocated space only as the data grows. For example, a slim person sits on the same couch and there is still room left for someone else.

How Thinly Provisioned Volumes Work

Now, let us explain how a thinly provisioned logical volume works. For instance, the total size of your storage is 500 MB, and someone from the application team requests 250 MB, which is 50% of the total storage.

Requests from an application team tend to be large, for example 250 MB or 500 MB, yet the team might never use more than 50% of the provisioned space. Even so, they may insist on getting the full size they requested. In such a scenario we can use thinly provisioned disks. A thinly provisioned disk is visible to the end user at its full size (250 MB), but it does not occupy that much of the storage when we provision it. Instead, it grows up to the allocated size (250 MB) as files are written to it. If the application data is around 25 MB, the disk actually occupies about 25 MB. That is how a thin provisioned disk works.

Explanation with Scenario

You have four users and each one needs 100 MB of disk space for their data.

If we serve this requirement from a thin pool, each user gets a virtual allocation of 100 MB. Behind the scenes, the pool consumes only as much space as the data written to it. Say user 1 writes only 50 MB and user 2 writes 75 MB, while users 3 and 4 together write no more than 100 MB; the total is then at most 225 MB of data.

Each user’s disk is still 100 MB in size, so 100 MB x 4 users = 400 MB is allocated out of a total storage size of 500 MB. We are on the safe side here: every user’s current usage is below the allocated 100 MB, and the thin pool still has enough space to create more thin volumes.
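
To make the arithmetic in this scenario concrete, here is a minimal shell sketch. The function names virtual_total and pool_free are made up for illustration; the numbers are the ones from the scenario, assuming users 3 and 4 write 100 MB between them.

```shell
# Sizes are in MB and come from the scenario above.

# virtual_total PER_USER USERS: space promised to the users.
virtual_total() {
    echo $(( $1 * $2 ))
}

# pool_free POOL_SIZE WRITTEN: space still unused in the pool.
pool_free() {
    echo $(( $1 - $2 ))
}

# user 1 + user 2 + users 3 and 4 combined (assumed split)
written=$(( 50 + 75 + 100 ))

echo "promised to users: $(virtual_total 100 4) MB"
echo "actually written:  ${written} MB"
echo "left in the pool:  $(pool_free 500 "$written") MB"
```

The gap between what is promised (400 MB) and what is written (225 MB) is the space a thin pool lets us reuse.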

What is Over-Provisioning

Now suppose two more users need 100 MB each, but 400 MB has already been allocated. Provisioning another 200 MB brings the total to 600 MB, which is more than the 500 MB we have. Providing more than what we have is called over-provisioning.

In Simple Words

Simply put, thin provisioning makes it possible to provision more than the space actually available. Out of a total of 500 MB you can create even a 1 GB file system for a user, and there is no risk unless the user fills up the disk by writing to it heavily.
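
As a rough illustration of the idea, the check below compares what has been promised with what the pool really holds. It is only a sketch: overcommit_percent and is_overprovisioned are hypothetical helper names, and the sizes are the 400 MB, 600 MB and 500 MB figures from the explanation above.

```shell
# overcommit_percent VIRTUAL_TOTAL POOL_SIZE (both in MB):
# prints the virtual allocation as a whole-number percentage of the pool.
overcommit_percent() {
    echo $(( $1 * 100 / $2 ))
}

# is_overprovisioned VIRTUAL_TOTAL POOL_SIZE:
# succeeds when more has been promised than the pool holds.
is_overprovisioned() {
    [ "$1" -gt "$2" ]
}

for virtual in 400 600; do
    if is_overprovisioned "$virtual" 500; then
        state="over-provisioned"
    else
        state="within the pool size"
    fi
    echo "${virtual} MB promised on a 500 MB pool: $(overcommit_percent "$virtual" 500)% (${state})"
done
```

With four users the pool is 80% committed; with six users it is 120% committed, which is over-provisioning.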

The steps for creating thin volumes are as follows.

- Check for free space in the volume group.

- Create a thin pool.

- Create thin volumes from the thin pool.

- Create file systems on the thin volumes.

- Create mount points and mount the file systems.

- Verify the thin pool and thin volume sizes.
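
The steps above can be sketched as a small shell script. This is only a sketch under a few assumptions: the run helper and the create_thin_setup function are hypothetical names introduced here, the volumes follow the tp_lv_userN naming used later in this guide, and devices are addressed as /dev/VG/LV. By default it only prints the commands instead of running them.

```shell
# DRY_RUN=1 (the default here) only prints each command; set DRY_RUN=0
# and run as root to actually execute them.
DRY_RUN=${DRY_RUN:-1}

# run CMD ARGS...: hypothetical helper that prints or executes a command.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# create_thin_setup VG POOL POOL_SIZE VOLUME_SIZE COUNT
create_thin_setup() {
    vg=$1; pool=$2; pool_size=$3; vol_size=$4; count=$5

    run vgs "$vg"                                          # check free space
    run lvcreate -L "$pool_size" --thinpool "$pool" "$vg"  # create the thin pool

    i=1
    while [ "$i" -le "$count" ]; do
        lv="tp_lv_user${i}"
        run lvcreate -V "$vol_size" --thin -n "$lv" "$vg/$pool"  # thin volume
        run mkfs.xfs "/dev/${vg}/${lv}"                          # file system
        run mkdir -p "/mnt/user${i}"                             # mount point
        run mount "/dev/${vg}/${lv}" "/mnt/user${i}"             # mount it
        i=$(( i + 1 ))
    done

    run lvs "$vg"                                          # verify the sizes
}

create_thin_setup vg_thin tp_pool 500M 100M 4
```

Printing the commands first makes it easy to review them before anything touches the disks.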

Let’s start creating a thin pool and thin volumes as per the above explanation.

Creating a Thin Pool

To create a thin pool we need an existing volume group.

[root@fileserver ~]# vgs

VG #PV #LV #SN Attr VSize VFree

centos 1 3 0 wz--n- <99.00g 4.00m

vg_thin 1 0 0 wz--n- 1020.00m 1020.00m

[root@fileserver ~]#

The listing shows two volume groups. The centos VG is already used for the OS; the vg_thin volume group will be used throughout this guide.

Then create a thin pool on top of the existing volume group.

# lvcreate -L 500M --thinpool tp_pool vg_thin

We have created a thin pool of only 500 MB, leaving roughly 500 MB free in the VG for future use.

[root@fileserver ~]# lvcreate -L 500M --thinpool tp_pool vg_thin

Thin pool volume with chunk size 64.00 KiB can address at most 15.81 TiB of data.

Logical volume "tp_pool" created.

[root@fileserver ~]#Once created list the thin pool with lvs command.

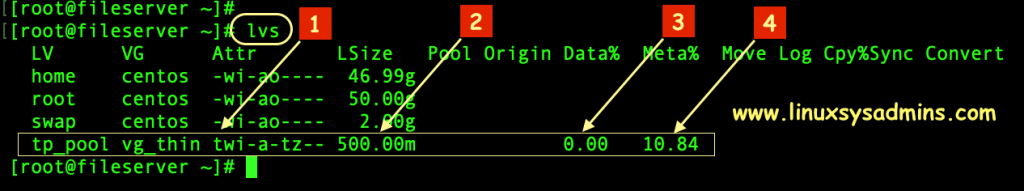

# lvsHere we need to understand a few of things from the output of lvs.

- The leading “t” means it’s a thin pool.

- Size of the thin pool.

- Current data usage of the thin pool.

- Metadata usage under this thin pool.
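
To read these fields without eyeballing the table, a small filter can label each row. This is a sketch, not part of LVM: describe_lvs is a hypothetical function, and the sample rows are copied from the lvs listings that appear later in this guide. It assumes whitespace-separated rows in the order name, attributes, size, Data% and, for pools only, Meta%.

```shell
# describe_lvs: reads "name attr size data% [meta%]" rows on stdin and
# labels each row by the first character of the attribute string.
describe_lvs() {
    awk '
        NF == 0 { next }
        {
            kind = "other"
            first = substr($2, 1, 1)
            if (first == "t") kind = "thin pool"
            if (first == "V") kind = "thin volume"
            meta = "n/a"
            if (NF >= 5) meta = $5 "%"
            printf "%s: %s, size %s, data %s%%, metadata %s\n", $1, kind, $3, $4, meta
        }
    '
}

# Sample rows taken from this guide. On a live system the input could come from:
#   lvs --noheadings -o lv_name,lv_attr,lv_size,data_percent,metadata_percent vg_thin
describe_lvs <<'EOF'
tp_lv_user1 Vwi-aotz-- 100.00m 53.31
tp_pool     twi-aotz-- 500.00m 47.67 13.87
EOF
```

Only the thin pool row carries a metadata percentage, which is why the thin volume is reported with "n/a".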

Creating Thin Volumes

Once the thin pool is created, we can go ahead and create thin volumes from the available space. In our setup we initially create four thin volumes, each 100 MB in size.

# lvcreate -V 100M --thin -n tp_lv_user1 vg_thin/tp_pool

# lvcreate -V 100M --thin -n tp_lv_user2 vg_thin/tp_pool

# lvcreate -V 100M --thin -n tp_lv_user3 vg_thin/tp_pool

# lvcreate -V 100M --thin -n tp_lv_user4 vg_thin/tp_pool

Output for reference:

[root@fileserver ~]# lvcreate -V 100M --thin -n tp_lv_user1 vg_thin/tp_pool

Logical volume "tp_lv_user1" created.

[root@fileserver ~]# lvcreate -V 100M --thin -n tp_lv_user2 vg_thin/tp_pool

Logical volume "tp_lv_user2" created.

[root@fileserver ~]# lvcreate -V 100M --thin -n tp_lv_user3 vg_thin/tp_pool

Logical volume "tp_lv_user3" created.

[root@fileserver ~]# lvcreate -V 100M --thin -n tp_lv_user4 vg_thin/tp_pool

Logical volume "tp_lv_user4" created.

[root@fileserver ~]#

To get more detailed information about the thin pool and thin volumes, we can run lvdisplay on the volume group. The output is fairly long.

Notice that Allocated pool data and Mapped size are 0.00% in the output below, because no data has been written to the thin pool or its volumes yet. The same holds for all four thin volumes.

[root@fileserver ~]# lvdisplay vg_thin

--- Logical volume ---

LV Name tp_pool

VG Name vg_thin

LV UUID mQL6BA-8kfl-LzLw-7npA-F5Zg-ntHw-qfNilc

LV Write Access read/write

LV Creation host, time fileserver, 2019-08-30 03:52:49 +0300

LV Pool metadata tp_pool_tmeta

LV Pool data tp_pool_tdata

LV Status available

# open 5

LV Size 500.00 MiB

Allocated pool data 0.00%

Allocated metadata 11.23%

Current LE 125

Segments 1

Allocation inherit

Read ahead sectors auto

currently set to 8192

Block device 253:5

--- Logical volume ---

LV Path /dev/vg_thin/tp_lv_user1

LV Name tp_lv_user1

VG Name vg_thin

LV UUID iVUkmG-c4qA-dLSe-XIG3-zAUF-UKFT-n5tGAu

LV Write Access read/write

LV Creation host, time fileserver, 2019-08-30 04:12:38 +0300

LV Pool name tp_pool

LV Status available

# open 0

LV Size 100.00 MiB

Mapped size 0.00%

Current LE 25

Segments 1

Allocation inherit

Read ahead sectors auto

currently set to 8192

Block device 253:7

--- Logical volume ---

LV Path /dev/vg_thin/tp_lv_user2

LV Name tp_lv_user2

VG Name vg_thin

LV UUID 4NKRLc-lwA8-cM1j-g791-g2Kj-iP54-GfWOI6

LV Write Access read/write

LV Creation host, time fileserver, 2019-08-30 04:12:39 +0300

LV Pool name tp_pool

LV Status available

# open 0

LV Size 100.00 MiB

Mapped size 0.00%

Current LE 25

Segments 1

Allocation inherit

Read ahead sectors auto

currently set to 8192

Block device 253:8

--- Logical volume ---

LV Path /dev/vg_thin/tp_lv_user3

LV Name tp_lv_user3

VG Name vg_thin

LV UUID avO0OQ-lHVI-dPXK-vjuD-Jmlk-sRNq-X5Lhsj

LV Write Access read/write

LV Creation host, time fileserver, 2019-08-30 04:12:39 +0300

LV Pool name tp_pool

LV Status available

# open 0

LV Size 100.00 MiB

Mapped size 0.00%

Current LE 25

Segments 1

Allocation inherit

Read ahead sectors auto

currently set to 8192

Block device 253:9

--- Logical volume ---

LV Path /dev/vg_thin/tp_lv_user4

LV Name tp_lv_user4

VG Name vg_thin

LV UUID Vib4RM-naEd-su52-tY9h-WZAE-6Pot-2EXPHk

LV Write Access read/write

LV Creation host, time fileserver, 2019-08-30 04:12:40 +0300

LV Pool name tp_pool

LV Status available

# open 0

LV Size 100.00 MiB

Mapped size 0.00%

Current LE 25

Segments 1

Allocation inherit

Read ahead sectors auto

currently set to 8192

Block device 253:10

[root@fileserver ~]#

Once you have copied some files, rerun this command; Allocated pool data and Mapped size should then show non-zero values.

Filesystem and Mounting

Create a filesystem on each of the thin volumes.

# mkfs.xfs /dev/mapper/vg_thin-tp_lv_user1

# mkfs.xfs /dev/mapper/vg_thin-tp_lv_user2

# mkfs.xfs /dev/mapper/vg_thin-tp_lv_user3

# mkfs.xfs /dev/mapper/vg_thin-tp_lv_user4

Create directories to mount the filesystems.

# mkdir /mnt/user{1..4}/

# ls -lthr /mnt/

Mount the filesystems on the newly created mount points.

# mount /dev/mapper/vg_thin-tp_lv_user1 /mnt/user1/

# mount /dev/mapper/vg_thin-tp_lv_user2 /mnt/user2/

# mount /dev/mapper/vg_thin-tp_lv_user3 /mnt/user3/

# mount /dev/mapper/vg_thin-tp_lv_user4 /mnt/user4/

Verify the sizes. All four should report the same size, with no user data yet under the new mount points.

[root@fileserver ~]# df -hP /mnt/user*

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/vg_thin-tp_lv_user1 97M 5.3M 92M 6% /mnt/user1

/dev/mapper/vg_thin-tp_lv_user2 97M 5.3M 92M 6% /mnt/user2

/dev/mapper/vg_thin-tp_lv_user3 97M 5.3M 92M 6% /mnt/user3

/dev/mapper/vg_thin-tp_lv_user4 97M 5.3M 92M 6% /mnt/user4

[root@fileserver ~]#

Copy some files of varying sizes onto each filesystem, then verify once again.

[root@fileserver ~]# df -hP /mnt/user*

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/vg_thin-tp_lv_user1 97M 56M 42M 57% /mnt/user1

/dev/mapper/vg_thin-tp_lv_user2 97M 81M 17M 83% /mnt/user2

/dev/mapper/vg_thin-tp_lv_user3 97M 46M 52M 47% /mnt/user3

/dev/mapper/vg_thin-tp_lv_user4 97M 66M 32M 68% /mnt/user4

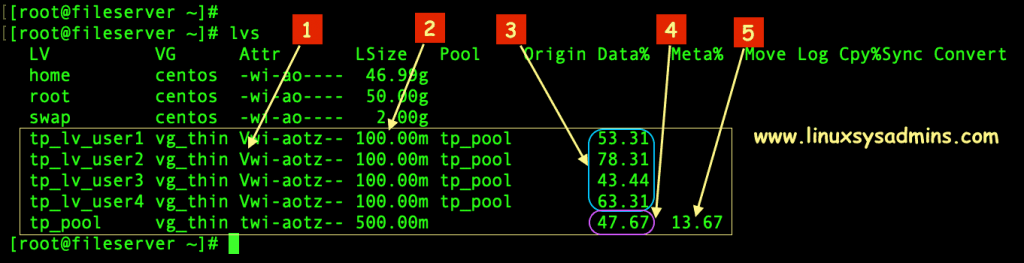

[root@fileserver ~]#Now run lvs command to verify the pool and volume utilization.

# lvsThe values have been changed after we copied a few files under all four mount points. We can notice the thin pool utilization have 47.67% so it’s almost half of pool size has been utilized.

- The leading V means its a Volume or thin volume.

- Size of the Thin volume.

- Data consumption under the thin volume.

- The total size of the thin pool usage.

- Metadata usage under the thin pool.
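
The pool figure follows directly from the per-volume figures. As a worked check, the sketch below uses the Data% values that the lvs listing later in this guide reports for the four volumes (53.31, 78.31, 43.44 and 63.31); the function name pool_data_percent is hypothetical, and it assumes all volumes have the same size and share no blocks.

```shell
# pool_data_percent POOL_SIZE_MB VOLUME_SIZE_MB DATA_PERCENT...
# Converts each volume's Data% into MB, sums them, and prints the
# result as a percentage of the pool size.
pool_data_percent() {
    pool=$1; vol=$2; shift 2
    printf '%s\n' "$@" | awk -v pool="$pool" -v vol="$vol" '
        { used += vol * $1 / 100 }
        END { printf "%.2f\n", used * 100 / pool }
    '
}

# Four 100 MB thin volumes in a 500 MB pool.
pool_data_percent 500 100 53.31 78.31 43.44 63.31
```

The four volumes hold 238.37 MB between them, which is 47.67% of the 500 MB pool and matches the value lvs reports.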

Over-Provisioning

Without Over-Provisioning Protection

Create two more 100 MB thin volumes for new users. Once the thin volumes created from a pool add up to more than the pool’s size, the setup carries more risk, and lvcreate prints a few warnings about over-provisioning.

# lvcreate -V 100M --thin -n tp_lv_user5 vg_thin/tp_pool

# lvcreate -V 100M --thin -n tp_lv_user6 vg_thin/tp_pool

Output for reference:

[root@fileserver ~]# lvcreate -V 100M --thin -n tp_lv_user6 vg_thin/tp_pool

WARNING: Sum of all thin volume sizes (600.00 MiB) exceeds the size of thin pool vg_thin/tp_pool and the amount of free space in volume group (512.00 MiB).

WARNING: You have not turned on protection against thin pools running out of space.

WARNING: Set activation/thin_pool_autoextend_threshold below 100 to trigger automatic extension of thin pools before they get full.

Logical volume "tp_lv_user6" created.

The newly created thin volumes, formatted and mounted in the same way as the first four:

[root@fileserver ~]# df -hP /mnt/user*

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/vg_thin-tp_lv_user1 97M 56M 42M 57% /mnt/user1

/dev/mapper/vg_thin-tp_lv_user2 97M 81M 17M 83% /mnt/user2

/dev/mapper/vg_thin-tp_lv_user3 97M 46M 52M 47% /mnt/user3

/dev/mapper/vg_thin-tp_lv_user4 97M 66M 32M 68% /mnt/user4

/dev/mapper/vg_thin-tp_lv_user5 97M 5.3M 92M 6% /mnt/user5

/dev/mapper/vg_thin-tp_lv_user6 97M 5.3M 92M 6% /mnt/user6

[root@fileserver ~]#

Copy some files to the newly created thin volumes.

[root@fileserver ~]# df -hP /mnt/user*

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/vg_thin-tp_lv_user1 97M 56M 42M 57% /mnt/user1

/dev/mapper/vg_thin-tp_lv_user2 97M 81M 17M 83% /mnt/user2

/dev/mapper/vg_thin-tp_lv_user3 97M 46M 52M 47% /mnt/user3

/dev/mapper/vg_thin-tp_lv_user4 97M 66M 32M 68% /mnt/user4

/dev/mapper/vg_thin-tp_lv_user5 97M 61M 37M 63% /mnt/user5

/dev/mapper/vg_thin-tp_lv_user6 97M 16M 82M 16% /mnt/user6

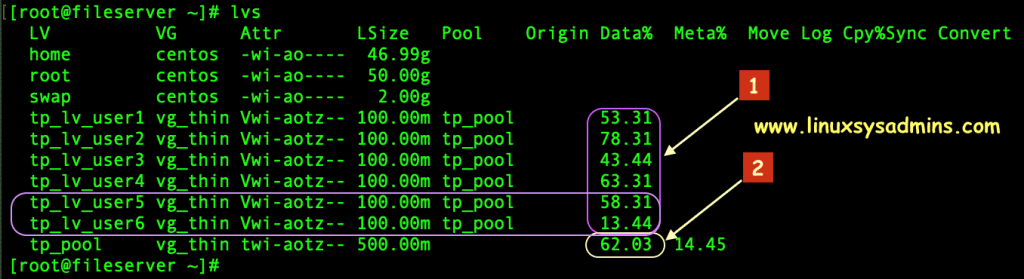

[root@fileserver ~]#Then verify the thin pool utilization by running lvs command. Still, we can notice the total utilization is 62.03%.

From the above snip, we can understand that over-provisioning is something we can provide more than what we actually have. The steps we followed have more risk of losing data if you are not keeping a (monitoring) eye on it.
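
One simple way to keep that eye on the pool is a periodic usage check. The following is a minimal sketch, not a full monitoring setup: check_pool_usage is a hypothetical function, the 60% and 80% limits are arbitrary examples, and the sample row uses the 62.03% figure from above.

```shell
# check_pool_usage THRESHOLD: reads "pool_name data_percent" rows on stdin
# and prints a warning for every pool at or above THRESHOLD percent.
check_pool_usage() {
    awk -v limit="$1" '
        NF >= 2 && $2 + 0 >= limit + 0 {
            printf "WARNING: %s is %s%% full\n", $1, $2
        }
    '
}

# Sample row from this guide. On a live system the input could come from:
#   lvs --noheadings -o lv_name,data_percent vg_thin/tp_pool
echo "tp_pool 62.03" | check_pool_usage 60   # prints a warning
echo "tp_pool 62.03" | check_pool_usage 80   # prints nothing
```

A check like this could be run from cron so that a filling pool is noticed before writes start to fail.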

With Over-Provisioning Protection

Configure Over-Provisioning protection

We can protect thin pools from running out of space by configuring lvm.conf. Both settings below live in its activation section.

# vim /etc/lvm/lvm.conf

The first setting auto-extends a thin pool when its usage exceeds this percentage. Setting it to 100 disables automatic extension. The minimum accepted value is 50.

thin_pool_autoextend_threshold = 80

The second setting controls how much space each automatic extension adds, so that an over-provisioned pool does not run out. The additional space added to the thin pool is this percentage of its current size.

thin_pool_autoextend_percent = 20

For example, with an 80% auto-extend threshold and a 20% auto-extend size, when a 500 MB thin pool exceeds 400 MB it is extended to 600 MB, and when it then exceeds 480 MB it is extended by another 20% to 720 MB.
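
The auto-extend arithmetic can be checked with a few lines of shell. This is only a sketch of the calculation: trigger_mb and extended_mb are hypothetical names, sizes are whole megabytes, and the rounding LVM applies to whole extents is ignored.

```shell
# trigger_mb SIZE_MB THRESHOLD: usage (in MB) above which the pool is extended.
trigger_mb() {
    echo $(( $1 * $2 / 100 ))
}

# extended_mb SIZE_MB PERCENT: pool size after one automatic extension.
extended_mb() {
    echo $(( $1 + $1 * $2 / 100 ))
}

# Two successive extensions with threshold = 80 and percent = 20.
size=500
for step in 1 2; do
    echo "pool ${size} MB: extends above $(trigger_mb "$size" 80) MB to $(extended_mb "$size" 20) MB"
    size=$(extended_mb "$size" 20)
done
```

Each extension is 20% of the pool’s current size, so a 500 MB pool grows to 600 MB and then to 720 MB.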

Create Thin Volumes with Over-Provisioning Protection

This is the free space in the volume group before we create more thin volumes.

[root@fileserver ~]# vgs

VG #PV #LV #SN Attr VSize VFree

centos 1 3 0 wz--n- <99.00g 4.00m

vg_thin 1 7 0 wz--n- 1020.00m 512.00m

[root@fileserver ~]#

Let’s create two more thin volumes.

[root@fileserver ~]# lvcreate -V 100M --thin -n tp_lv_user5 vg_thin/tp_pool

Logical volume "tp_lv_user5" created.

[root@fileserver ~]# lvcreate -V 100M --thin -n tp_lv_user6 vg_thin/tp_pool

WARNING: Sum of all thin volume sizes (600.00 MiB) exceeds the size of thin pool vg_thin/tp_pool and the amount of free space in volume group (512.00 MiB).

Logical volume "tp_lv_user6" created.

[root@fileserver ~]#

Next, create a filesystem on each of the new thin volumes.

# mkfs.xfs /dev/mapper/vg_thin-tp_lv_user5

# mkfs.xfs /dev/mapper/vg_thin-tp_lv_user6

List and verify the current thin pool and thin volumes. As expected, the thin pool is still the same size and the newly created thin volumes hold no data yet.

[root@fileserver ~]# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

home centos -wi-ao---- 46.99g

root centos -wi-ao---- 50.00g

swap centos -wi-ao---- 2.00g

tp_lv_user1 vg_thin Vwi-aotz-- 100.00m tp_pool 53.31

tp_lv_user2 vg_thin Vwi-aotz-- 100.00m tp_pool 78.31

tp_lv_user3 vg_thin Vwi-aotz-- 100.00m tp_pool 43.44

tp_lv_user4 vg_thin Vwi-aotz-- 100.00m tp_pool 63.31

tp_lv_user5 vg_thin Vwi-a-tz-- 100.00m tp_pool 0.00

tp_lv_user6 vg_thin Vwi-a-tz-- 100.00m tp_pool 0.00

tp_pool vg_thin twi-aotz-- 500.00m 47.67 13.87

Copy some files to the newly created thin volumes.

[root@fileserver ~]# df -hP

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/centos-root 50G 1.4G 49G 3% /

devtmpfs 411M 0 411M 0% /dev

tmpfs 423M 0 423M 0% /dev/shm

tmpfs 423M 6.8M 416M 2% /run

tmpfs 423M 0 423M 0% /sys/fs/cgroup

/dev/sda1 1014M 186M 829M 19% /boot

/dev/mapper/centos-home 47G 33M 47G 1% /home

tmpfs 85M 0 85M 0% /run/user/0

/dev/mapper/vg_thin-tp_lv_user1 97M 56M 42M 57% /mnt/user1

/dev/mapper/vg_thin-tp_lv_user2 97M 81M 17M 83% /mnt/user2

/dev/mapper/vg_thin-tp_lv_user3 97M 46M 52M 47% /mnt/user3

/dev/mapper/vg_thin-tp_lv_user4 97M 66M 32M 68% /mnt/user4

/dev/mapper/vg_thin-tp_lv_user5 97M 97M 800K 100% /mnt/user5

/dev/mapper/vg_thin-tp_lv_user6 97M 91M 6.7M 94% /mnt/user6

Once we copy in the files, thin pool utilization climbs past the 80% threshold, so the pool is automatically extended by 20% using free space from the volume group. Run vgs to confirm the free space left in the VG.

[root@fileserver ~]# vgs

VG #PV #LV #SN Attr VSize VFree

centos 1 3 0 wz--n- <99.00g 4.00m

vg_thin 1 7 0 wz--n- 1020.00m 412.00m

[root@fileserver ~]#

Now check the size of the thin pool; it should be larger than before. The extension kicked in automatically to protect the over-provisioned pool. This is how protected over-provisioning works on a thinly provisioned logical volume.

[root@fileserver ~]# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

home centos -wi-ao---- 46.99g

root centos -wi-ao---- 50.00g

swap centos -wi-ao---- 2.00g

tp_lv_user1 vg_thin Vwi-aotz-- 100.00m tp_pool 53.31

tp_lv_user2 vg_thin Vwi-aotz-- 100.00m tp_pool 78.31

tp_lv_user3 vg_thin Vwi-aotz-- 100.00m tp_pool 43.44

tp_lv_user4 vg_thin Vwi-aotz-- 100.00m tp_pool 63.31

tp_lv_user5 vg_thin Vwi-aotz-- 100.00m tp_pool 94.31

tp_lv_user6 vg_thin Vwi-aotz-- 100.00m tp_pool 88.31

tp_pool vg_thin twi-aotz-- 600.00m 70.17 15.72

[root@fileserver ~]#

How to Extend a Thin Pool

There are two steps to follow when extending a thin pool:

- Extend the thin pool’s metadata.

- Then extend the thin pool.

We should not extend the thin pool straight away. First, check the existing metadata size and usage by running the lvs command with the “-a” option.

[root@fileserver ~]# lvs -a

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

home centos -wi-ao---- 46.99g

root centos -wi-ao---- 50.00g

swap centos -wi-ao---- 2.00g

[lvol0_pmspare] vg_thin ewi------- 4.00m

tp_lv_user1 vg_thin Vwi-a-tz-- 100.00m tp_pool 59.31

tp_lv_user2 vg_thin Vwi-a-tz-- 100.00m tp_pool 95.12

tp_lv_user3 vg_thin Vwi-a-tz-- 100.00m tp_pool 43.44

tp_lv_user4 vg_thin Vwi-a-tz-- 100.00m tp_pool 95.06

tp_lv_user5 vg_thin Vwi-a-tz-- 100.00m tp_pool 58.31

tp_lv_user6 vg_thin Vwi-a-tz-- 100.00m tp_pool 95.06

tp_pool vg_thin twi-aotz-- 600.00m 74.39 16.11

[tp_pool_tdata] vg_thin Twi-ao---- 600.00m

[tp_pool_tmeta] vg_thin ewi-ao---- 4.00m

[root@fileserver ~]#

The metadata size appears on the last line, [tp_pool_tmeta].

Extend the Metadata Size

The metadata volume is currently just 4 MB in size. Let us add another 4 MB to it.

# lvextend --poolmetadatasize +4M vg_thin/tp_pool

The output below shows the metadata changing from 4 MB to 8 MB.

[root@fileserver ~]# lvextend --poolmetadatasize +4M vg_thin/tp_pool

Size of logical volume vg_thin/tp_pool_tmeta changed from 4.00 MiB (1 extents) to 8.00 MiB (2 extents).

Logical volume vg_thin/tp_pool_tmeta successfully resized.

[root@fileserver ~]#

Run the lvs command with the “-a” option once again to verify the metadata size.

[root@fileserver ~]# lvs -a

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

home centos -wi-ao---- 46.99g

root centos -wi-ao---- 50.00g

swap centos -wi-ao---- 2.00g

[lvol0_pmspare] vg_thin ewi------- 4.00m

tp_lv_user1 vg_thin Vwi-a-tz-- 100.00m tp_pool 59.31

tp_lv_user2 vg_thin Vwi-a-tz-- 100.00m tp_pool 95.12

tp_lv_user3 vg_thin Vwi-a-tz-- 100.00m tp_pool 43.44

tp_lv_user4 vg_thin Vwi-a-tz-- 100.00m tp_pool 95.06

tp_lv_user5 vg_thin Vwi-a-tz-- 100.00m tp_pool 58.31

tp_lv_user6 vg_thin Vwi-a-tz-- 100.00m tp_pool 95.06

tp_pool vg_thin twi-aotz-- 600.00m 74.39 13.04

[tp_pool_tdata] vg_thin Twi-ao---- 600.00m

[tp_pool_tmeta] vg_thin ewi-ao---- 8.00m

[root@fileserver ~]#

After the extension, the metadata size is 8 MB.

Extend the Thin Pool Size

Once the metadata has been extended, extend the thin pool to the desired size using space available in the volume group. Here we extend it from the current 600 MB to 700 MB.

# lvextend -L +100M /dev/vg_thin/tp_pool

For your reference:

[root@fileserver ~]# lvextend -L +100M /dev/vg_thin/tp_pool

Size of logical volume vg_thin/tp_pool_tdata changed from 600.00 MiB (150 extents) to 700.00 MiB (175 extents).

Logical volume vg_thin/tp_pool_tdata successfully resized.

[root@fileserver ~]#

This is the size of the thin pool after the extension.

[root@fileserver ~]# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

home centos -wi-ao---- 46.99g

root centos -wi-ao---- 50.00g

swap centos -wi-ao---- 2.00g

tp_lv_user1 vg_thin Vwi-a-tz-- 100.00m tp_pool 59.31

tp_lv_user2 vg_thin Vwi-a-tz-- 100.00m tp_pool 95.12

tp_lv_user3 vg_thin Vwi-a-tz-- 100.00m tp_pool 43.44

tp_lv_user4 vg_thin Vwi-a-tz-- 100.00m tp_pool 95.06

tp_lv_user5 vg_thin Vwi-a-tz-- 100.00m tp_pool 58.31

tp_lv_user6 vg_thin Vwi-a-tz-- 100.00m tp_pool 95.06

tp_pool vg_thin twi-aotz-- 700.00m 63.76 13.04

[root@fileserver ~]#

If you need more space in the volume group, extend it with the usual steps, as covered in our earlier LVM guides.
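
Notice that the pool’s Data% dropped from 74.39 to 63.76 even though no data was removed. The same amount of data is simply a smaller share of a larger pool, as this small sketch shows (rescale_percent is a hypothetical helper name):

```shell
# rescale_percent OLD_PERCENT OLD_SIZE_MB NEW_SIZE_MB
# Prints the usage percentage after the pool size changes, assuming the
# amount of stored data stays the same.
rescale_percent() {
    awk -v p="$1" -v old="$2" -v new="$3" 'BEGIN { printf "%.2f\n", p * old / new }'
}

# 74.39% of a 600 MB pool, after the pool grows to 700 MB.
rescale_percent 74.39 600 700
```

The result agrees with the 63.76 that lvs shows for tp_pool after the extension.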

How to Remove a Thin Pool

To remove a thin pool, first unmount all the filesystems, then remove all the thin volumes, and finally remove the thin pool.

# lvremove -y /dev/vg_thin/tp_lv_user[1-6]

Here we remove all the thin volumes in a single go.

[root@fileserver ~]# lvremove -y /dev/vg_thin/tp_lv_user[1-6]

Logical volume "tp_lv_user1" successfully removed

Logical volume "tp_lv_user2" successfully removed

Logical volume "tp_lv_user3" successfully removed

Logical volume "tp_lv_user4" successfully removed

Logical volume "tp_lv_user5" successfully removed

Logical volume "tp_lv_user6" successfully removed

[root@fileserver ~]#

Next, remove the thin pool using lvremove.

# lvremove /dev/vg_thin/tp_pool

If you have several thin pools, make sure to remove the correct one.

[root@fileserver ~]# lvremove /dev/vg_thin/tp_pool

Do you really want to remove active logical volume vg_thin/tp_pool? [y/n]: y

Logical volume "tp_pool" successfully removed

[root@fileserver ~]#

Finally, verify by running the lvs and vgs commands.

[root@fileserver ~]# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

home centos -wi-ao---- 46.99g

root centos -wi-ao---- 50.00g

swap centos -wi-ao---- 2.00g

[root@fileserver ~]#

[root@fileserver ~]# vgs

VG #PV #LV #SN Attr VSize VFree

centos 1 3 0 wz--n- <99.00g 4.00m

vg_thin 1 0 0 wz--n- 1020.00m 1020.00m

[root@fileserver ~]#

That’s it; we have finished working with thinly provisioned logical volumes.
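
The removal order described in this section (unmount, remove the thin volumes, then remove the pool) can be sketched as a script. As before, this is a hedged sketch: run and remove_thin_setup are hypothetical names, the mount points and volume names follow the /mnt/userN and tp_lv_userN patterns used in this guide, and by default the commands are only printed.

```shell
# DRY_RUN=1 (the default here) only prints each command; set DRY_RUN=0
# and run as root to actually execute them.
DRY_RUN=${DRY_RUN:-1}

# run CMD ARGS...: hypothetical helper that prints or executes a command.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# remove_thin_setup VG POOL COUNT
remove_thin_setup() {
    vg=$1; pool=$2; count=$3

    # 1. Unmount every filesystem that lives on a thin volume.
    i=1
    while [ "$i" -le "$count" ]; do
        run umount "/mnt/user${i}"
        i=$(( i + 1 ))
    done

    # 2. Remove the thin volumes.
    i=1
    while [ "$i" -le "$count" ]; do
        run lvremove -y "/dev/${vg}/tp_lv_user${i}"
        i=$(( i + 1 ))
    done

    # 3. Remove the pool last; without -y, lvremove asks for confirmation.
    run lvremove "/dev/${vg}/${pool}"
}

remove_thin_setup vg_thin tp_pool 6
```

Leaving -y off the final lvremove keeps the confirmation prompt, which helps when several thin pools exist.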

Conclusion

Logical volume management is one of the coolest concepts in Linux, and thinly provisioned logical volumes are one of the advanced storage features supported under LVM. Subscribe to our newsletter for more guides and stay in touch with us. Your feedback is most welcome in the comment section below.

Great guide. So, only the thin pool will be auto-extended, not the thin volumes. We have to manually extend the thin volumes if we need to give more space. Is that correct?